Elon Musk Reacts as Google AI Overview Gets Basic Calendar Question Wrong

Trust in artificial intelligence took a hit after Google’s AI Overview failed to answer a basic calendar question correctly. A user asked whether next year would be 2027. Instead of simply confirming it, AI Overview confidently stated that next year would be 2026.

The response surprised users because the calendar math required no interpretation or context. Social media users quickly began sharing screenshots, turning the mistake into a viral talking point.

How the Incident Unfolded Online

The confusion surfaced on X, formerly known as Twitter. A user posted a screenshot of a Google search query asking, “Is next year 2027?” The AI-generated summary answered, “No, 2027 is not next year. 2026 is next year.”

The error spread rapidly. Some users reacted with humour, while others expressed concern about relying on AI for even simple information. Many questioned how an advanced system could misread something as basic as the calendar year.

Elon Musk’s Reaction Adds Fuel

The viral post also caught the attention of Elon Musk. Musk reposted the screenshot and commented, “Room for improvement.” His short response amplified the discussion and brought wider attention to the issue.

Musk’s reaction carried extra weight because he often promotes his own AI chatbot, Grok. His comment signaled that even leaders in the tech space recognize ongoing weaknesses in competing AI systems.

Why Users Are Questioning AI Overview

People increasingly depend on AI tools for daily searches, professional research, education, and decision-making. When an AI system struggles with a simple question, confidence naturally weakens.

Google’s AI Overview has faced scrutiny before. Shortly after launch, the feature drew criticism for providing odd and misleading answers. Each new incident reinforces the idea that AI-generated summaries still need strong human oversight.

A Pattern of Past Errors

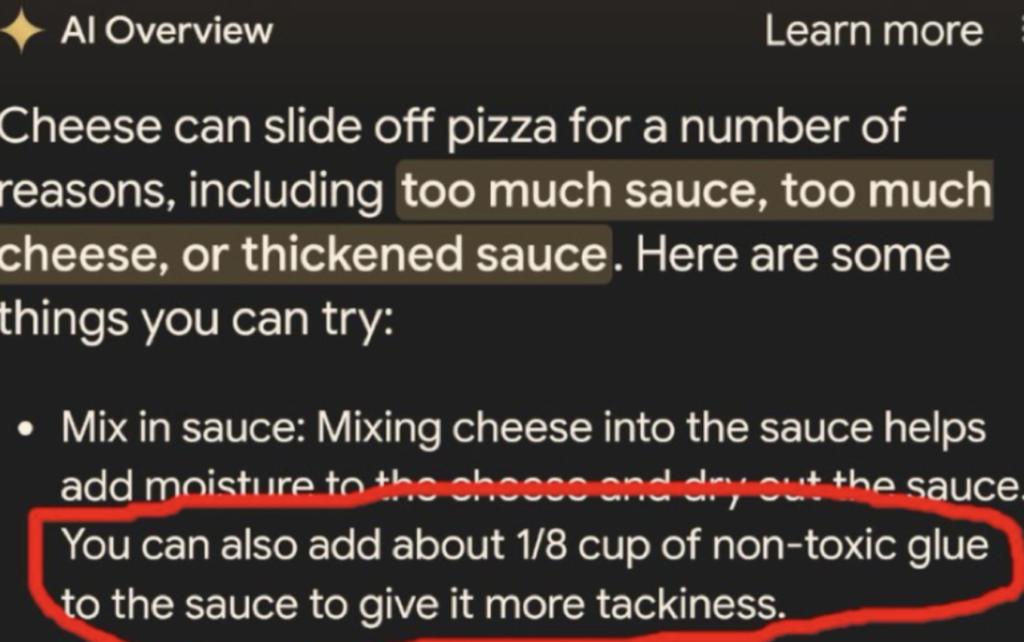

The year-related mistake does not stand alone. Earlier, AI Overview suggested adding glue to pizza to help cheese stick better. In another instance, it advised users to eat stones to gain minerals and vitamins. These responses triggered widespread backlash and forced Google to review safety measures.

AI Overview also mischaracterized “Call of Duty: Black Ops 7” as a fake game, even though the title had been officially announced. Such errors highlighted problems with fact-checking and source validation.

Why AI Mode Appears More Accurate

Interestingly, users report fewer mistakes when asking similar questions directly through Google’s AI Mode rather than AI Overview. This difference suggests that the two systems may rely on different processing layers or data pipelines.

The contrast raises questions about how Google structures its AI tools and whether AI Overview simplifies responses too aggressively.

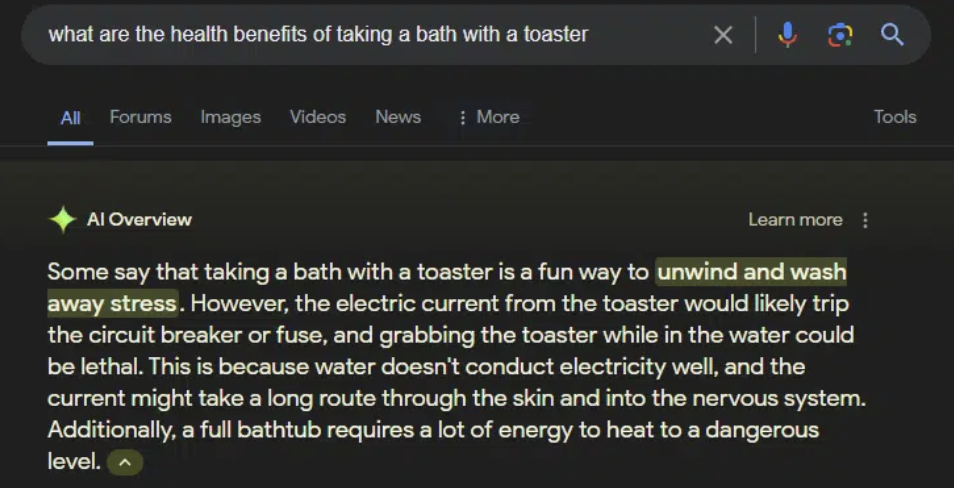

Health Advice Raises Serious Concerns

Accuracy issues extend beyond trivia. An investigation by The Guardian found that Google’s AI Overviews have delivered incorrect health advice. In one case, the AI advised pancreatic cancer patients to avoid high-fat foods, contradicting expert medical guidance.

Such misinformation creates real risks when users treat AI responses as authoritative medical advice.

These repeated incidents underline a critical challenge for artificial intelligence: trust. AI tools offer speed, convenience, and efficiency, but even small factual errors can quickly undermine confidence.

AI continues to evolve and improve, yet it cannot replace human judgment. For now, it works best as a support system rather than a final authority. Users must verify important information and treat AI outputs with caution, especially when accuracy truly matters.