Javed Akhtar Furious Over Fake AI Video Claiming He “Turned to God,” May Take Legal Action

Just days after actor-politician Kangana Ranaut raised concerns over AI-generated images misrepresenting her appearance in Parliament, veteran lyricist and screenwriter Javed Akhtar has strongly reacted to a fake AI-generated video that falsely claims he has “turned to God.” The senior writer has expressed deep anger over the circulation of the video and is now considering taking legal action against those behind it.

Fake AI Video Sparks Outrage

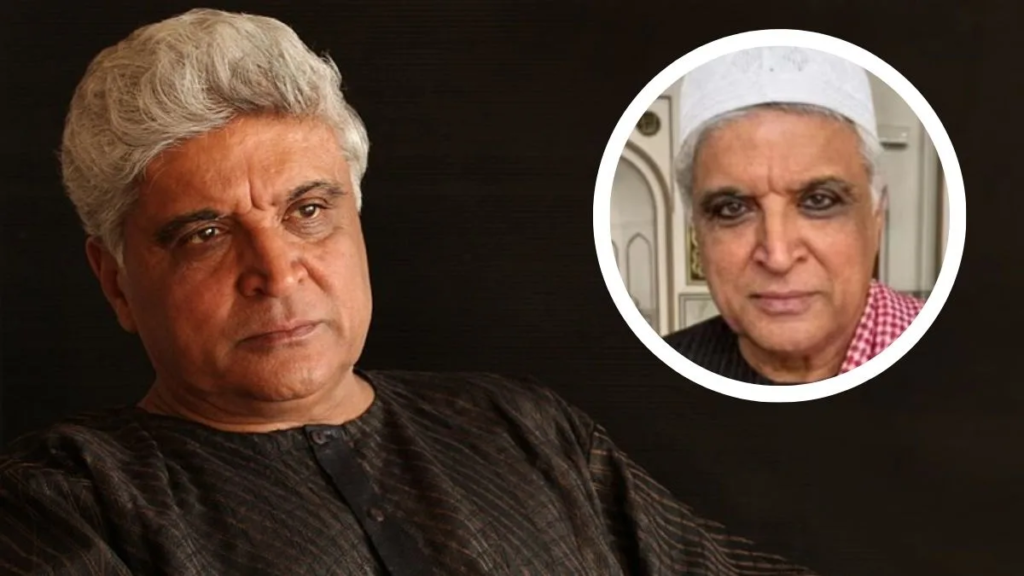

On Friday morning, Javed Akhtar took to the social media platform X (formerly Twitter) to address the issue publicly. He was responding to a video that shows a computer-generated image of him wearing a skull cap, along with claims suggesting a change in his personal beliefs. Calling the content completely false, Akhtar dismissed the video as misleading and damaging.

In his post, he made it clear that the video was not real and had been created using artificial intelligence to spread false information. He described the visuals and the accompanying claim as “rubbish” and said the content was deliberately designed to misrepresent him.

“This Is a Fake, Computer-Generated Image”

Akhtar shared a link to the video, which had been circulating on Facebook, and followed it up with a strong statement. He clarified that the image shown in the video was entirely computer-generated and had no connection to reality.

“A fake video is in circulation showing my fake computer-generated picture with a topi on my head, claiming that ultimately I have turned to God. It is rubbish,” he wrote, leaving no room for ambiguity about his stance.

Legal Action Under Consideration

The lyricist did not stop at expressing his displeasure. He also revealed that he is seriously considering approaching the cyber police over the issue. According to Akhtar, the spread of such fake content directly harms the reputation and credibility he has built over decades of work in Indian cinema and literature.

He stated that he may take the matter to court, not only against the individual who created the deepfake video but also against those who knowingly forwarded it. His statement underlined that sharing fake content can be as harmful as creating it.

Growing Threat of AI Deepfakes

The incident has once again drawn attention to the growing misuse of artificial intelligence to create deepfake images and videos. As advanced AI tools become more accessible, public figures are increasingly the targets of misinformation campaigns that distort their identity, beliefs, or actions.

Recently, several celebrities and public personalities have raised alarms over AI-generated content being used to manipulate public perception. Such material often spreads rapidly on social media, making the damage difficult to contain once it goes viral.

Reputation and Credibility at Stake

For someone like Javed Akhtar, whose public life and opinions have often sparked debate, the circulation of fake visuals can have serious consequences. Deepfake content not only confuses audiences but can also be used to push false narratives that damage trust.

Akhtar’s response reflects a growing demand for accountability in the digital space. By considering legal action, he has signaled that such acts should not be dismissed as harmless pranks but treated as serious offenses.

A Call for Responsibility Online

The lyricist’s strong reaction serves as a reminder that artificial intelligence, while powerful, must be used responsibly. Experts warn that without strict checks and awareness, AI-generated misinformation could become a major challenge for society.

If he does pursue legal action, Akhtar’s case may set an example for how public figures can push back against digital misinformation.

For now, his message is clear: fake AI-generated content that distorts reality and damages reputation will not be tolerated.